LLM Router

The LLM Router component routes requests to the most appropriate LLM based on OpenRouter model specifications.

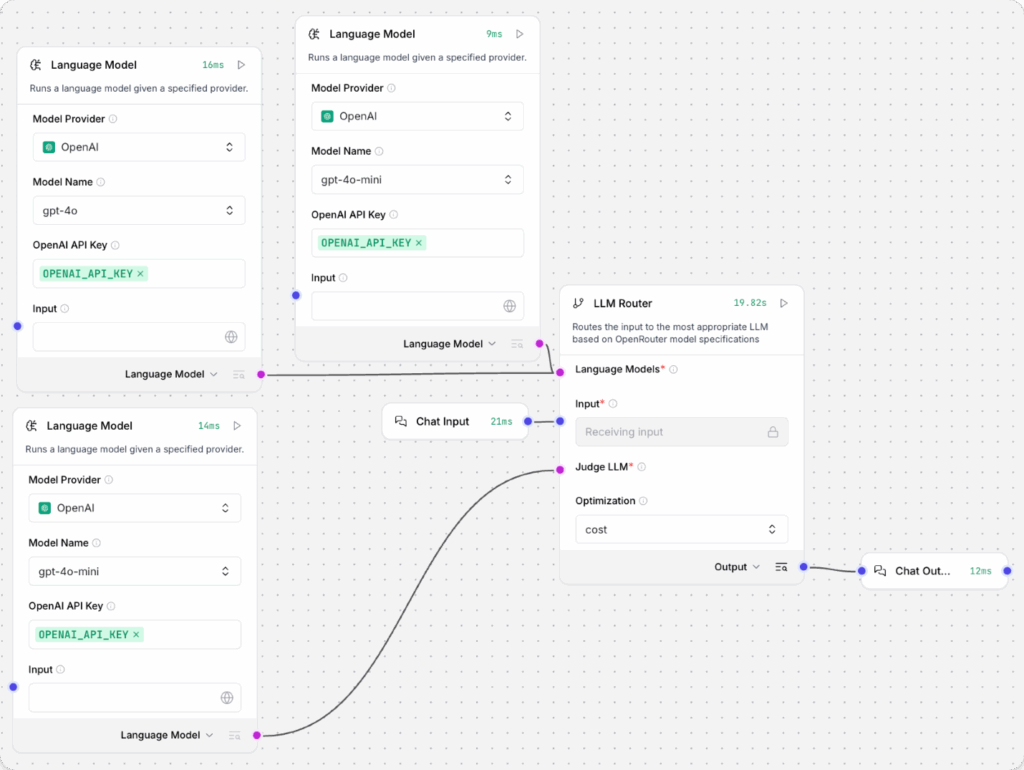

To use the component in a flow, you connect multiple Language Model components to the LLM Router component. One model is the judge LLM that analyzes input messages to understand the evaluation context, selects the most appropriate model from the other attached LLMs, and then routes the input to the selected model. The selected model processes the input and then returns the generated response.
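The routing steps described above can be sketched in plain Python. This is a minimal illustration, not the component's actual implementation: the `route` function, the `Model` alias, the prompt wording, and the stub models are all hypothetical, and the judge is assumed to reply with a pool index.

```python
from typing import Callable, Sequence

# Hypothetical stand-in for a connected Language Model: a name plus a callable.
Model = tuple[str, Callable[[str], str]]


def route(query: str, judge: Callable[[str], str], pool: Sequence[Model],
          fallback_to_first: bool = True) -> tuple[str, str]:
    """Ask the judge for a pool index, then run the query on that model."""
    if not pool:
        raise ValueError("the model pool is empty")
    # The judge sees the query and the candidate names and replies with an index.
    listing = "\n".join(f"{i}: {name}" for i, (name, _) in enumerate(pool))
    reply = judge(f"Query: {query}\nModels:\n{listing}\nReply with one index.")
    try:
        index = int(reply.strip())
        if not 0 <= index < len(pool):
            raise ValueError(f"index {index} is out of range")
    except ValueError:
        if not fallback_to_first:
            raise
        index = 0  # assumed behavior: the first connected model is the default
    name, model = pool[index]
    return name, model(query)


# Stub models so the sketch runs without any API keys.
pool = [
    ("small-model", lambda q: f"[small] {q}"),
    ("large-model", lambda q: f"[large] {q}"),
]
print(route("Summarize this paragraph.", lambda prompt: "1", pool))
print(route("Hello!", lambda prompt: "not a number", pool))
```

In the second call the judge's reply cannot be parsed, so the sketch falls back to the first model in the pool, which mirrors the default-model behavior described for the component.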

The following example flow has three Language Model components. One is the judge LLM, and the other two are in the LLM pool for request routing. The Chat Input and Chat Output components create a seamless chat interaction where you send a message and receive a response without being aware of the underlying routing.

LLM Router parameters

Some LLM Router component input parameters are hidden by default in the visual editor. You can toggle parameters through the Controls in the component’s header menu.

| Name | Display Name | Info |
|---|---|---|
| models | Language Models | Input parameter. Connect LanguageModel output from multiple Language Model components to create a pool of models. The judge_llm selects models from this pool when routing requests. The first model you connect is the default model if there is a problem with model selection or routing. |
| input_value | Input | Input parameter. The incoming query to be routed to the model selected by the judge LLM. |
| judge_llm | Judge LLM | Input parameter. Connect LanguageModel output from one Language Model component to serve as the judge LLM for request routing. |
| optimization | Optimization | Input parameter. Set a preferred characteristic for model selection by the judge LLM. The options are quality (highest response quality), speed (fastest response time), cost (most cost-effective model), or balanced (equal weight for quality, speed, and cost). Default: balanced |
| use_openrouter_specs | Use OpenRouter Specs | Input parameter. Whether to fetch model specifications from the OpenRouter API. If false, only the model name is provided to the judge LLM. Default: Enabled (true) |
| timeout | API Timeout | Input parameter. Set a timeout duration in seconds for API requests made by the router. Default: 10 |
| fallback_to_first | Fallback to First Model | Input parameter. Whether to use the first LLM in models as a backup if routing fails to reach the selected model. Default: Enabled (true) |
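As a quick reference, the non-connection parameters and their documented defaults can be collected in a small settings object. This is only a sketch: `RouterSettings` and `OPTIMIZATION_CHOICES` are hypothetical names for illustration, not part of the component's API, and the validation rules are assumptions drawn from the table.

```python
from dataclasses import dataclass

# Assumed option names, taken from the optimization row and its default.
OPTIMIZATION_CHOICES = ("quality", "speed", "cost", "balanced")


@dataclass(frozen=True)
class RouterSettings:
    """Hypothetical container mirroring the documented parameters and defaults."""
    optimization: str = "balanced"
    use_openrouter_specs: bool = True
    timeout: int = 10  # seconds allowed for API requests made by the router
    fallback_to_first: bool = True

    def __post_init__(self) -> None:
        # Reject values the table does not list.
        if self.optimization not in OPTIMIZATION_CHOICES:
            raise ValueError(f"optimization must be one of {OPTIMIZATION_CHOICES}")
        if self.timeout <= 0:
            raise ValueError("timeout must be a positive number of seconds")


defaults = RouterSettings()
cheap = RouterSettings(optimization="cost", timeout=5)
print(defaults)
print(cheap)
```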

LLM Router outputs

The LLM Router component provides three output options. You can set the desired output type near the component’s output port.

a. Output: A Message containing the response to the original query as generated by the selected LLM. Use this output for regular chat interactions.

b. Selected Model Info: A Data object containing information about the selected model, such as its name and version.

c. Routing Decision: A Message containing the judge model’s reasoning for selecting a particular model, including input query length and number of models considered. For example:

Model Selection Decision:
- Selected Model Index: 0
- Selected Robility flow Model Name: gpt-4o-mini
- Selected API Model ID (if resolved): openai/gpt-4o-mini
- Optimization Preference: cost
- Input Query Length: 27 characters (~5 tokens)
- Number of Models Considered: 2
- Specifications Source: OpenRouter API

This output is useful for debugging when the judge model doesn't appear to select the best model.
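When debugging, it can help to turn the Routing Decision text into a dictionary so individual fields are easy to log or compare. The sketch below assumes the message keeps the "label: value" layout of the example above, one field per line; `parse_routing_decision` is a hypothetical helper, not something the component provides.

```python
def parse_routing_decision(text: str) -> dict[str, str]:
    """Collect 'label: value' lines from a Routing Decision message."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        # Drop list markers such as "-" or "–" before splitting on the first colon.
        cleaned = line.strip().lstrip("-–").strip()
        key, sep, value = cleaned.partition(":")
        # Lines without a value (for example, the title line) are skipped.
        if sep and value.strip():
            fields[key.strip()] = value.strip()
    return fields


sample = """Model Selection Decision:
- Selected Model Index: 0
- Selected API Model ID (if resolved): openai/gpt-4o-mini
- Optimization Preference: cost
- Number of Models Considered: 2
- Specifications Source: OpenRouter API"""

decision = parse_routing_decision(sample)
print(decision["Selected Model Index"], decision["Optimization Preference"])
```

Splitting on the first colon only keeps values that contain their own colons intact, at the cost of assuming that labels never contain one.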