Vector Stores

Robility flow’s Vector Store components read and write vector data. They handle embedding storage, vector search, Graph RAG traversals, and provider-specific search features, such as those offered by OpenSearch, Elasticsearch, and Vectara.

These components are critical for vector search applications, such as Retrieval Augmented Generation (RAG) chatbots that need to retrieve relevant context from large datasets.

Most of these components connect to a specific vector database provider, but some components support multiple providers or platforms. For example, the Cassandra vector store component can connect to self-managed Apache Cassandra-based clusters as well as Astra DB, which is a managed Cassandra DBaaS.

Other types of storage, like traditional structured databases and chat memory, are handled through other components like the SQL Database component or the Message History component.

Use Vector Store components in a flow

Points to note

For a tutorial using Vector Store components in a flow, see Create a vector RAG chatbot.

The following steps introduce the use of Vector Store components in a flow, including configuration details, how the components work when you run a flow, why you might need multiple Vector Store components in one flow, and useful supporting components, such as Embedding Model and Parser components.

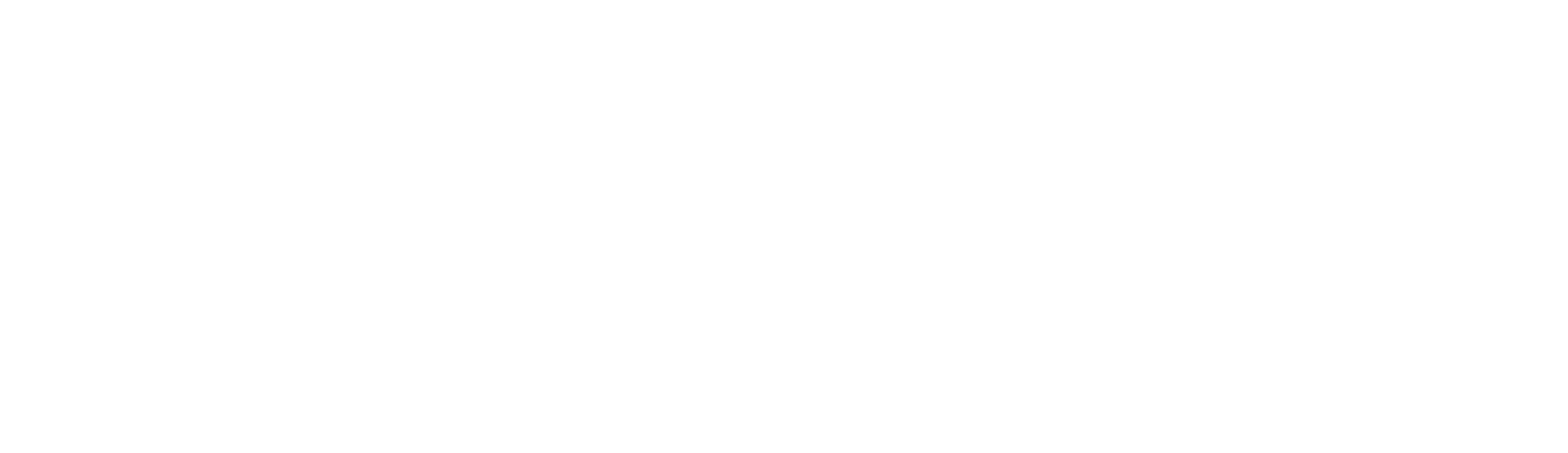

1. Create a flow with the Vector Store RAG template.

This template has two subflows. The Load Data subflow loads embeddings and content into a vector database, and the Retriever subflow runs a vector search to retrieve relevant context based on a user’s query.

2. Configure the database connection for both Astra DB components, or replace them with another pair of Vector Store components of your choice. Make sure the components connect to the same vector store, and that the component in the Retriever subflow is able to run a similarity search.

The parameters you set in each Vector Store component depend on the component’s role in your flow. In this example, the Load Data subflow writes to the vector store, whereas the Retriever subflow reads from the vector store. Therefore, search-related parameters are only relevant to the Vector Store component in the Retriever subflow.

For information about specific configuration parameters, see the section of this page for your chosen Vector Store component, as well as Hidden parameters.

3. To configure the embedding model, do one of the following:

a. Use an OpenAI model: In both OpenAI Embeddings components, enter your OpenAI API key. You can use the default model or select a different OpenAI embedding model.

b. Use another provider: Replace the OpenAI Embeddings components with another pair of Embedding Model components of your choice, and then configure the parameters and credentials accordingly.

c. Use Astra DB vectorize: If you are using an Astra DB vector store that has a vectorize integration, you can remove both OpenAI Embeddings components. If you do this, the vectorize integration automatically generates embeddings from the Ingest Data input (in the Load Data subflow) and the Search Query input (in the Retriever subflow).

Tips

If your vector store already contains embeddings, make sure your Embedding Model components use the same model as your previous embeddings. Mixing embedding models in the same vector store can produce inaccurate search results.

4. Recommended: In the Split Text component, optimize the chunking settings for your embedding model. For example, if your embedding model has a token limit of 512, then the Chunk Size parameter must not exceed that limit.

Additionally, because the Retriever subflow passes the chat input directly to the Vector Store component for vector search, make sure that your chat input string doesn’t exceed your embedding model’s limits. For this example, you can enter a query that is within the limits. In a production environment, however, you might need additional checks or preprocessing to keep queries within those limits. For example, use additional components to prepare the chat input before running the vector search, or enforce chat input limits in your application code.

5. In the Language Model component, enter your OpenAI API key, or select a different provider and model to use for the chat portion of the flow.

6. Run the Load Data subflow to populate your vector store. In the File component, select one or more files, and then click Run component on the Vector Store component in the Load Data subflow.

The Load Data subflow loads files from your local machine, chunks them, generates embeddings for the chunks, and then stores the chunks and their embeddings in the vector database.

The Load Data subflow is separate from the Retriever subflow because you probably won’t run it every time you use the chat. You can run the Load Data subflow as needed to preload or update the data in your vector store. Then, your chat interactions only use the components that are necessary for chat.

If your vector store already contains data that you want to use for vector search, then you don’t need to run the Load Data subflow.

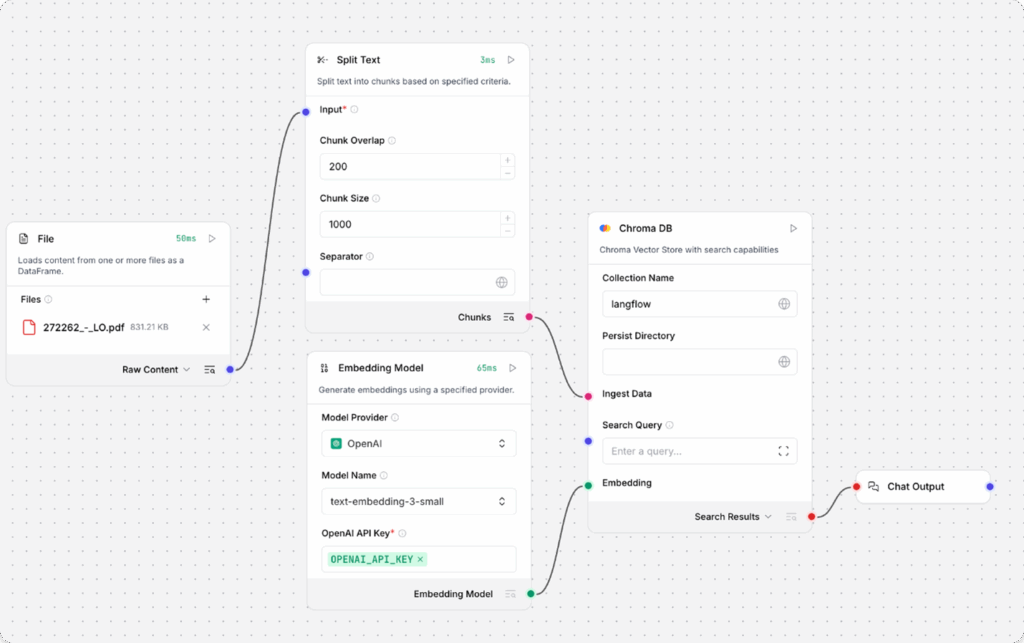

7. Open the Playground and start chatting to run the Retriever subflow.

The Retriever subflow generates an embedding from the chat input, runs a vector search to retrieve similar content from your vector store, parses the search results into supplemental context for the LLM, and then uses the LLM to generate a natural language response to your query. The LLM uses the vector search results along with its internal training data and tools, such as basic web search and datetime information, to produce the response.

To avoid passing the entire block of raw search results to the LLM, the Parser component extracts text strings from the search results Data object and then passes them to the Prompt Template component in Message format. From there, the strings and other template content are compiled into natural language instructions for the LLM.

You can use other components for this transformation, such as the Data Operations component, depending on how you want to use the search results.

To view the raw search results, click Inspect output on the Vector Store component after running the Retriever subflow.
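To make the data flow of the two subflows concrete, here is a minimal, self-contained Python sketch. It is not Robility flow or LangChain code: `ToyEmbedder`, `InMemoryVectorStore`, `split_text`, `load_data`, and `retrieve_prompt` are hypothetical stand-ins, and the "embedding" is a sparse bag-of-words vector rather than a real embedding model. It mirrors step 4 (character-based chunking under a size limit, plus a query length check), the Tips guidance (one embedding model per store), step 6 (chunk, embed, and store), and step 7 up to the LLM call (search, then parse the results into prompt text).

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ToyEmbedder:
    """Hypothetical stand-in for an Embedding Model component: an L2-normalized bag-of-words vector."""
    model_name: str

    def embed(self, text: str) -> dict[str, float]:
        counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
        norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
        return {token: c / norm for token, c in counts.items()}


@dataclass
class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database that remembers which model wrote its vectors."""
    model_name: str
    rows: list[tuple[dict[str, float], str]] = field(default_factory=list)

    def _check_model(self, embedder: ToyEmbedder) -> None:
        # Mixing embedding models in one store makes similarity scores meaningless.
        if embedder.model_name != self.model_name:
            raise ValueError(f"store uses {self.model_name!r}, got {embedder.model_name!r}")

    def add_texts(self, texts: list[str], embedder: ToyEmbedder) -> None:
        self._check_model(embedder)
        self.rows.extend((embedder.embed(t), t) for t in texts)

    def similarity_search(self, query: str, embedder: ToyEmbedder, k: int = 2) -> list[str]:
        self._check_model(embedder)
        q = embedder.embed(query)

        def cosine(vec: dict[str, float]) -> float:
            # Both vectors are unit length, so the dot product equals cosine similarity.
            return sum(w * vec.get(token, 0.0) for token, w in q.items())

        ranked = sorted(self.rows, key=lambda row: cosine(row[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def split_text(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Character-based chunking: every chunk has at most chunk_size characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need 0 <= chunk_overlap < chunk_size")
    if not text:
        return []
    step = chunk_size - chunk_overlap
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - chunk_overlap, 1), step)]


def load_data(store, embedder, documents, chunk_size=200, chunk_overlap=20):
    """Load Data subflow: chunk each document, embed the chunks, and write them to the store."""
    for doc in documents:
        store.add_texts(split_text(doc, chunk_size, chunk_overlap), embedder)


def retrieve_prompt(store, embedder, question, k=2, max_query_chars=500):
    """Retriever subflow up to the LLM call: check the query, search, and parse results into a prompt."""
    if len(question) > max_query_chars:
        raise ValueError("query exceeds the configured input limit")
    context = "\n".join(store.similarity_search(question, embedder, k))
    return f"Answer using this context:\n{context}\n\nQuestion: {question}"


embedder = ToyEmbedder("toy-embed-v1")
store = InMemoryVectorStore("toy-embed-v1")
load_data(store, embedder, ["Cassandra stores wide rows.", "OpenSearch supports k-NN search."],
          chunk_size=40, chunk_overlap=5)
print(retrieve_prompt(store, embedder, "Which engine supports k-NN search?", k=1))
```

In a real flow, the Split Text, Embedding Model, Vector Store, Parser, and Prompt Template components fill these roles, and the store is an external database rather than a Python list. Loading and retrieval are separate functions for the same reason the template separates the subflows: you load data occasionally, but search on every chat turn.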

Hidden parameters

You can inspect a Vector Store component’s parameters to learn more about the inputs it accepts, the features it supports, and how to configure it.

Many input parameters for Vector Store components are hidden by default in the visual editor. You can toggle parameters through the Controls in each component’s header menu.

Some parameters are conditional: they are only available after you set other parameters or select specific options for other parameters. Conditional parameters might not be visible in the Controls pane until you set the required dependencies. However, all parameters are always listed in a component’s code.
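As a rough mental model of conditional parameters, the following sketch treats each parameter as optionally depending on another parameter’s value. The `Param` class, the `visible_params` helper, and the parameter names and option values are hypothetical illustrations, not Robility flow’s actual implementation.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class Param:
    name: str
    # Hypothetical dependency: (other_parameter, required_value). None means always available.
    depends_on: Optional[tuple[str, Any]] = None


def visible_params(params: list[Param], values: dict[str, Any]) -> list[str]:
    """Return the parameters whose dependency, if any, is satisfied by the current values."""
    return [
        p.name
        for p in params
        if p.depends_on is None or values.get(p.depends_on[0]) == p.depends_on[1]
    ]


PARAMS = [
    Param("collection_name"),
    Param("search_type"),
    Param("search_score_threshold", depends_on=("search_type", "Similarity with score threshold")),
]

print(visible_params(PARAMS, {"search_type": "Similarity"}))
# ['collection_name', 'search_type']
print(visible_params(PARAMS, {"search_type": "Similarity with score threshold"}))
# ['collection_name', 'search_type', 'search_score_threshold']
```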

For information about a specific component’s parameters, see the provider’s documentation and the component details.

Search results output

If you use a Vector Store component to query your vector store, it produces search results that you can pass to downstream components in your flow as a list of Data objects or a tabular DataFrame. If both types are supported, you can set the format near the component’s output port in the visual editor.

The exception to this pattern is the Vectara RAG component, which outputs only an answer string in Message format.
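The difference between the two output shapes can be sketched in plain Python. Here `Record` is a hypothetical stand-in for a Data object (text plus metadata), and `to_table` pivots the results into columns, similar in spirit to a DataFrame, filling missing metadata with `None`. Neither is the actual Data or DataFrame class used by Robility flow, and the metadata keys are illustrative.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Record:
    """Hypothetical stand-in for one search result (a Data object)."""
    text: str
    metadata: dict[str, Any] = field(default_factory=dict)


def to_table(records: list[Record]) -> dict[str, list[Any]]:
    """Pivot a list of results into columns: a 'text' column plus one column per metadata key."""
    keys = sorted({key for r in records for key in r.metadata})
    table: dict[str, list[Any]] = {"text": [r.text for r in records]}
    for key in keys:
        table[key] = [r.metadata.get(key) for r in records]  # None where a result lacks the key
    return table


results = [
    Record("OpenSearch supports k-NN search.", {"source": "a.txt", "score": 0.91}),
    Record("Cassandra stores wide rows.", {"source": "b.txt"}),
]
print(to_table(results))
```

A list of records suits components that process one result at a time, while the column layout suits components that filter or select fields across all results.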

Vector store instances

Vector Store components use an instance of a vector store class, such as a LangChain vector store, to drive the underlying vector search functions. In the component code, this instance is often named vector_store, but some components use a different name, such as the provider’s name.

For the Cassandra Graph and Astra DB Graph components, vector_store is a graph vector store instance.

These instances are provider-specific and configured according to the component’s parameters. For example, the Redis component creates an instance of RedisVectorStore based on the component’s parameters, such as the connection string, index name, and schema.

Components don’t necessarily expose every option of the underlying LangChain class as a component parameter. Depending on the provider, unexposed options might use default values or, if supported in Robility flow, be configurable through environment variables. For information about specific options, see the LangChain API reference and provider documentation.
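The pattern described in this section, where component parameters configure a provider-specific instance and unexposed options fall back to a default or an environment variable, can be sketched as follows. `FakeRedisStore`, `RedisLikeComponent`, and the `VECTOR_DISTANCE_METRIC` environment variable are hypothetical; this is not the Redis component’s code or the LangChain `RedisVectorStore` API.

```python
import os
from dataclasses import dataclass


@dataclass
class FakeRedisStore:
    """Hypothetical provider-specific vector store instance."""
    redis_url: str
    index_name: str
    distance_metric: str


class RedisLikeComponent:
    """Hypothetical component: its parameters become constructor arguments of the instance."""

    def __init__(self, redis_server_url: str, index_name: str) -> None:
        self.redis_server_url = redis_server_url
        self.index_name = index_name

    def build_vector_store(self) -> FakeRedisStore:
        # Not exposed as a component parameter: read a (hypothetical) environment variable, else a default.
        metric = os.environ.get("VECTOR_DISTANCE_METRIC", "COSINE")
        vector_store = FakeRedisStore(self.redis_server_url, self.index_name, metric)
        return vector_store


component = RedisLikeComponent("redis://localhost:6379", "docs")
print(component.build_vector_store())
```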

Vector Store Connection ports

The Astra DB and OpenSearch components have an additional Vector Store Connection output. This output can only connect to a VectorStore input port, and it was intended for use with dedicated Graph RAG components.

The only non-legacy component that supports this input is the Graph RAG component, which was meant as a Graph RAG extension to the Astra DB component. Instead of pairing those two components, you can use the Astra DB Graph component, which includes both the vector store connection and Graph RAG functionality. OpenSearch instances support graph traversal through built-in RAG functionality and plugins.
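For intuition about what a Graph RAG traversal adds on top of plain vector search, here is a minimal sketch: take the top `k` documents by similarity as seeds, then walk outward breadth-first along links stored in document metadata, up to `max_depth` hops. The `links` metadata field, the precomputed `scores`, and the `graph_rag_retrieve` function are assumptions for illustration; the Astra DB Graph, Cassandra Graph, and Graph RAG components use their own traversal strategies and configuration.

```python
from collections import deque
from typing import Any


def graph_rag_retrieve(
    docs: dict[str, dict[str, Any]],
    scores: dict[str, float],
    k: int = 2,
    max_depth: int = 1,
    edge_field: str = "links",
) -> list[str]:
    """Vector-search seeds plus breadth-first expansion along metadata links."""
    # 1. Seeds: the k documents most similar to the query (scores assumed to come from a vector search).
    seeds = sorted(scores, key=scores.get, reverse=True)[:k]
    depth = {doc_id: 0 for doc_id in seeds}
    queue = deque(seeds)
    # 2. Traversal: follow links breadth-first, never revisiting a document.
    while queue:
        current = queue.popleft()
        if depth[current] >= max_depth:
            continue
        for neighbor in docs[current].get(edge_field, []):
            if neighbor in docs and neighbor not in depth:
                depth[neighbor] = depth[current] + 1
                queue.append(neighbor)
    return list(depth)  # seeds first, then documents in the order they were reached


docs = {"a": {"links": ["b"]}, "b": {"links": ["c", "a"]}, "c": {}, "d": {}}
scores = {"a": 0.9, "b": 0.1, "c": 0.05, "d": 0.5}
print(graph_rag_retrieve(docs, scores, k=1, max_depth=2))
# ['a', 'b', 'c']
```

Document `c` has a low similarity score and would be missed by a plain top-`k` search, but the traversal reaches it through its link from `b`.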